|

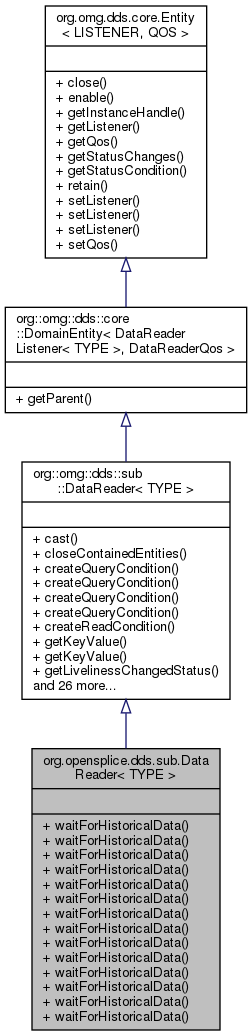

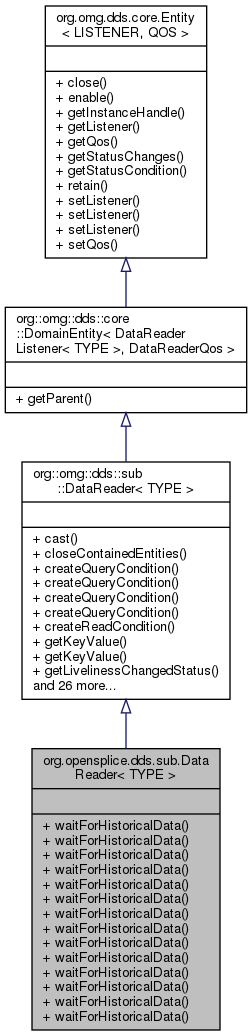

OpenSplice Java 5 DCPS

v6.x

OpenSplice Java 5 OpenSplice Data Distribution Service Data-Centric Publish-Subscribe API

|


Public Member Functions | |

| public< OTHER > DataReader< OTHER > | cast () |

| Cast this data reader to the given type, or throw an exception if the cast fails. More... | |

| abstract void | close () |

| Halt communication and dispose the resources held by this Entity. More... | |

| void | closeContainedEntities () |

| This operation closes all the entities that were created by means of the "create" operations on the DataReader. More... | |

| QueryCondition< TYPE > | createQueryCondition (String queryExpression, List< String > queryParameters) |

| This operation creates a QueryCondition. More... | |

| QueryCondition< TYPE > | createQueryCondition (String queryExpression, String... queryParameters) |

| This operation creates a QueryCondition. More... | |

| QueryCondition< TYPE > | createQueryCondition (Subscriber.DataState states, String queryExpression, List< String > queryParameters) |

| This operation creates a QueryCondition. More... | |

| QueryCondition< TYPE > | createQueryCondition (Subscriber.DataState states, String queryExpression, String... queryParameters) |

| This operation creates a QueryCondition. More... | |

| ReadCondition< TYPE > | createReadCondition (Subscriber.DataState states) |

| This operation creates a ReadCondition. More... | |

| void | enable () |

| This operation enables the Entity. More... | |

| InstanceHandle | getInstanceHandle () |

| TYPE | getKeyValue (TYPE keyHolder, InstanceHandle handle) |

| This operation can be used to retrieve the instance key that corresponds to an instance handle. More... | |

| TYPE | getKeyValue (InstanceHandle handle) |

| This operation can be used to retrieve the instance key that corresponds to an instance handle. More... | |

| LISTENER | getListener () |

| This operation allows access to the existing Listener attached to the Entity. More... | |

| LivelinessChangedStatus | getLivelinessChangedStatus () |

| This operation obtains the LivelinessChangedStatus object of the DataReader. More... | |

| PublicationBuiltinTopicData | getMatchedPublicationData (InstanceHandle publicationHandle) |

| This operation retrieves information on a publication that is currently "associated" with the DataReader; that is, a publication with a matching org.omg.dds.topic.Topic and compatible QoS that the application has not indicated should be "ignored" by means of org.omg.dds.domain.DomainParticipant#ignorePublication(InstanceHandle). More... | |

| Set< InstanceHandle > | getMatchedPublications () |

| This operation retrieves the list of publications currently "associated" with the DataReader; that is, publications that have a matching org.omg.dds.topic.Topic and compatible QoS that the application has not indicated should be "ignored" by means of org.omg.dds.domain.DomainParticipant#ignorePublication(InstanceHandle). More... | |

| Subscriber | getParent () |

| QOS | getQos () |

| This operation allows access to the existing set of QoS policies for the Entity. More... | |

| RequestedDeadlineMissedStatus | getRequestedDeadlineMissedStatus () |

| This operation obtains the RequestedDeadlineMissedStatus object of the DataReader. More... | |

| RequestedIncompatibleQosStatus | getRequestedIncompatibleQosStatus () |

| This operation obtains the RequestedIncompatibleQosStatus object of the DataReader. More... | |

| SampleLostStatus | getSampleLostStatus () |

| This operation obtains the SampleLostStatus object of the DataReader. More... | |

| SampleRejectedStatus | getSampleRejectedStatus () |

| This operation obtains the SampleRejectedStatus object of the DataReader. More... | |

| Set< Class<? extends Status > > | getStatusChanges () |

| This operation retrieves the list of communication statuses in the Entity that are 'triggered'. More... | |

| StatusCondition< DataReader< TYPE > > | getStatusCondition () |

| SubscriptionMatchedStatus | getSubscriptionMatchedStatus () |

| This operation obtains the SubscriptionMatchedStatus object of the DataReader. More... | |

| TopicDescription< TYPE > | getTopicDescription () |

| InstanceHandle | lookupInstance (TYPE keyHolder) |

| This operation takes as a parameter an instance and returns a handle that can be used in subsequent operations that accept an instance handle as an argument. More... | |

| Sample.Iterator< TYPE > | read () |

| This operation accesses a collection of samples from this DataReader. More... | |

| Sample.Iterator< TYPE > | read (Selector< TYPE > query) |

| This operation accesses a collection of samples from this DataReader. More... | |

| Sample.Iterator< TYPE > | read (int maxSamples) |

| This operation accesses a collection of samples from this DataReader. More... | |

| List< Sample< TYPE > > | read (List< Sample< TYPE >> samples) |

| This operation accesses a collection of samples from this DataReader. More... | |

| List< Sample< TYPE > > | read (List< Sample< TYPE >> samples, Selector< TYPE > selector) |

| This operation accesses a collection of samples from this DataReader. More... | |

| boolean | readNextSample (Sample< TYPE > sample) |

| This operation copies the next, non-previously accessed sample from this DataReader. More... | |

| void | retain () |

| Indicates that references to this object may go out of scope but that the application expects to look it up again later. More... | |

| Selector< TYPE > | select () |

| Provides a Selector that can be used to refine what read or take methods return. More... | |

| void | setListener (LISTENER listener) |

| This operation installs a Listener on the Entity. More... | |

| void | setListener (LISTENER listener, Collection< Class<? extends Status >> statuses) |

| This operation installs a Listener on the Entity. More... | |

| void | setListener (LISTENER listener, Class<? extends Status >... statuses) |

| This operation installs a Listener on the Entity. More... | |

| void | setQos (QOS qos) |

| This operation is used to set the QoS policies of the Entity. More... | |

| Sample.Iterator< TYPE > | take () |

| This operation accesses a collection of samples from this DataReader. More... | |

| Sample.Iterator< TYPE > | take (int maxSamples) |

| This operation accesses a collection of samples from this DataReader. More... | |

| Sample.Iterator< TYPE > | take (Selector< TYPE > query) |

| This operation accesses a collection of samples from this DataReader. More... | |

| List< Sample< TYPE > > | take (List< Sample< TYPE >> samples) |

| This operation accesses a collection of samples from this DataReader. More... | |

| List< Sample< TYPE > > | take (List< Sample< TYPE >> samples, Selector< TYPE > query) |

| This operation accesses a collection of samples from this DataReader. More... | |

| boolean | takeNextSample (Sample< TYPE > sample) |

| This operation copies the next, non-previously accessed sample from this DataReader and "removes" it from the DataReader so it is no longer accessible. More... | |

| void | waitForHistoricalData (String filterExpression, List< String > filterParameters, Time minSourceTimestamp, Time maxSourceTimestamp, ResourceLimits resourceLimits, Duration maxWait) throws TimeoutException |

| This operation will block the application thread until all historical data that matches the supplied conditions is received. More... | |

| void | waitForHistoricalData (String filterExpression, List< String > filterParameters, Time minSourceTimestamp, Time maxSourceTimestamp, Duration maxWait) throws TimeoutException |

| This operation will block the application thread until all historical data that matches the supplied conditions is received. More... | |

| void | waitForHistoricalData (String filterExpression, List< String > filterParameters, ResourceLimits resourceLimits, Duration maxWait) throws TimeoutException |

| This operation will block the application thread until all historical data that matches the supplied conditions is received. More... | |

| void | waitForHistoricalData (String filterExpression, List< String > filterParameters, Duration maxWait) throws TimeoutException |

| This operation will block the application thread until all historical data that matches the supplied conditions is received. More... | |

| void | waitForHistoricalData (Time minSourceTimestamp, Time maxSourceTimestamp, ResourceLimits resourceLimits, Duration maxWait) throws TimeoutException |

| This operation will block the application thread until all historical data that matches the supplied conditions is received. More... | |

| void | waitForHistoricalData (Time minSourceTimestamp, Time maxSourceTimestamp, Duration maxWait) throws TimeoutException |

| This operation will block the application thread until all historical data that matches the supplied conditions is received. More... | |

| void | waitForHistoricalData (ResourceLimits resourceLimits, Duration maxWait) throws TimeoutException |

| This operation will block the application thread until all historical data that matches the supplied conditions is received. More... | |

| void | waitForHistoricalData (String filterExpression, List< String > filterParameters, Time minSourceTimestamp, Time maxSourceTimestamp, ResourceLimits resourceLimits, long maxWait, TimeUnit unit) throws TimeoutException |

| This operation will block the application thread until all historical data that matches the supplied conditions is received. More... | |

| void | waitForHistoricalData (String filterExpression, List< String > filterParameters, Time minSourceTimestamp, Time maxSourceTimestamp, long maxWait, TimeUnit unit) throws TimeoutException |

| This operation will block the application thread until all historical data that matches the supplied conditions is received. More... | |

| void | waitForHistoricalData (String filterExpression, List< String > filterParameters, ResourceLimits resourceLimits, long maxWait, TimeUnit unit) throws TimeoutException |

| This operation will block the application thread until all historical data that matches the supplied conditions is received. More... | |

| void | waitForHistoricalData (Duration maxWait) throws TimeoutException |

| This operation is intended only for DataReader entities for which org.omg.dds.core.policy.Durability#getKind() is not org.omg.dds.core.policy.Durability.Kind#VOLATILE. More... | |

| void | waitForHistoricalData (String filterExpression, List< String > filterParameters, long maxWait, TimeUnit unit) throws TimeoutException |

| This operation will block the application thread until all historical data that matches the supplied conditions is received. More... | |

| void | waitForHistoricalData (long maxWait, TimeUnit unit) throws TimeoutException |

| This operation is intended only for DataReader entities for which org.omg.dds.core.policy.Durability#getKind() is not org.omg.dds.core.policy.Durability.Kind#VOLATILE. More... | |

| void | waitForHistoricalData (Time minSourceTimestamp, Time maxSourceTimestamp, ResourceLimits resourceLimits, long maxWait, TimeUnit unit) throws TimeoutException |

| This operation will block the application thread until all historical data that matches the supplied conditions is received. More... | |

| void | waitForHistoricalData (Time minSourceTimestamp, Time maxSourceTimestamp, long maxWait, TimeUnit unit) throws TimeoutException |

| This operation will block the application thread until all historical data that matches the supplied conditions is received. More... | |

| void | waitForHistoricalData (ResourceLimits resourceLimits, long maxWait, TimeUnit unit) throws TimeoutException |

| This operation will block the application thread until all historical data that matches the supplied conditions is received. More... | |

Definition at line 30 of file DataReader.java.

|

inherited |

Cast this data reader to the given type, or throw an exception if the cast fails.

| <OTHER> | The type of the data subscribed to by this reader, according to the caller. |

| ClassCastException | if the cast fails |

|

abstractinherited |

Halt communication and dispose the resources held by this Entity.

Closing an Entity implicitly closes all of its contained objects, if any. For example, closing a Publisher also closes all of its contained DataWriters.

An Entity cannot be closed if it has any unclosed dependent objects, not including contained objects. These include the following:

The deletion of a org.omg.dds.pub.DataWriter will automatically unregister all instances. Depending on the settings of the org.omg.dds.core.policy.WriterDataLifecycle, the deletion of the DataWriter may also dispose all instances.

| PreconditionNotMetException | if close is called on an Entity with unclosed dependent object(s), not including contained objects. |

|

inherited |

This operation closes all the entities that were created by means of the "create" operations on the DataReader.

That is, it closes all contained ReadCondition and QueryCondition objects.

| org.omg.dds.core.PreconditionNotMetException | if any of the contained entities is in a state where it cannot be closed. |

Implemented in org.opensplice.dds.sub.AbstractDataReader< TYPE >.

|

inherited |

This operation creates a QueryCondition.

The returned QueryCondition will be attached and belong to the DataReader. It will trigger on any sample state, view state, or instance state.

The selection of the content is done using the queryExpression with parameters queryParameters.

Example:

This example assumes that a Subscriber called "subscriber" and a DataReader named "fooDr" have been created

and that a datatype called Foo is present: class Foo { int age; String name; };

// Create a QueryCondition

// And apply a condition that only samples with age > 0 and name equals BILL are shown.

String expr = "age > %0 AND name = %1";

List<String> params = new ArrayList<String>();

params.add("0");

params.add("BILL");

QueryCondition<Foo> queryCond = fooDr.createQueryCondition(expr,params);

| queryExpression | The returned condition will only trigger on samples that pass this content-based filter expression. |

| queryParameters | A set of parameter values for the queryExpression. |

| org.omg.dds.core.DDSException | An internal error has occurred. |

| org.omg.dds.core.AlreadyClosedException | The corresponding DataReader has been closed. |

| org.omg.dds.core.OutOfResourcesException | The Data Distribution Service ran out of resources to complete this operation. |

Implemented in org.opensplice.dds.sub.AbstractDataReader< TYPE >.
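The placeholders `%0` and `%1` in a queryExpression refer to entries of queryParameters by position. The following is a minimal, self-contained sketch of that positional convention only; it does not use the OpenSplice API. The class `QueryExpressionSketch` and its `bind` method are hypothetical names introduced for illustration, and a real DDS implementation evaluates the expression against sample fields rather than substituting text.

```java
import java.util.Arrays;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper, not part of org.omg.dds or OpenSplice.
final class QueryExpressionSketch {
    // Matches positional placeholders such as %0, %1, %12.
    private static final Pattern PLACEHOLDER = Pattern.compile("%(\\d+)");

    // Returns the expression with every %n replaced by parameters.get(n).
    static String bind(String expression, List<String> parameters) {
        Matcher m = PLACEHOLDER.matcher(expression);
        StringBuffer out = new StringBuffer();
        while (m.find()) {
            int index = Integer.parseInt(m.group(1));
            if (index >= parameters.size()) {
                throw new IllegalArgumentException("No parameter supplied for %" + index);
            }
            // quoteReplacement keeps '$' and '\' in a parameter value literal.
            m.appendReplacement(out, Matcher.quoteReplacement(parameters.get(index)));
        }
        m.appendTail(out);
        return out.toString();
    }

    public static void main(String[] args) {
        System.out.println(bind("age > %0 AND name = %1", Arrays.asList("0", "BILL")));
    }
}
```

With the parameters "0" and "BILL", the expression from the example above reads as `age > 0 AND name = BILL`.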

|

inherited |

This operation creates a QueryCondition.

The returned QueryCondition will be attached and belong to the DataReader. It will trigger on any sample state, view state, or instance state.

The selection of the content is done using the queryExpression with parameters queryParameters.

Example:

This example assumes that a Subscriber called "subscriber" and a DataReader named "fooDr" have been created

and that a datatype called Foo is present: class Foo { int age; String name; };

// Create a QueryCondition

// And apply a condition that only samples with age > 0 and name equals BILL are shown.

String expr = "age > %0 AND name = %1";

QueryCondition<Foo> queryCond = fooDr.createQueryCondition(expr,"0","BILL");

| queryExpression | The returned condition will only trigger on samples that pass this content-based filter expression. |

| queryParameters | A set of parameter values for the queryExpression. |

| org.omg.dds.core.DDSException | An internal error has occurred. |

| org.omg.dds.core.AlreadyClosedException | The corresponding DataReader has been closed. |

| org.omg.dds.core.OutOfResourcesException | The Data Distribution Service ran out of resources to complete this operation. |

Implemented in org.opensplice.dds.sub.AbstractDataReader< TYPE >.

|

inherited |

This operation creates a QueryCondition.

The returned QueryCondition will be attached and belong to the DataReader.

The selection of the content is done using the queryExpression with parameters queryParameters.

State Masks

The result of the QueryCondition also depends on the selection of samples determined by three org.omg.dds.sub.Subscriber.DataState masks: the sample state, the view state, and the instance state.

Example:

This example assumes that a Subscriber called "subscriber" and a DataReader named "fooDr" have been created

and that a datatype called Foo is present: class Foo { int age; String name; };

// Create a QueryCondition with a DataState with a sample state of not read, new view state and alive instance state.

// And apply a condition that only samples with age > 0 and name equals BILL are shown.

DataState ds = subscriber.createDataState();

ds = ds.with(SampleState.NOT_READ)

.with(ViewState.NEW)

.with(InstanceState.ALIVE);

String expr = "age > %0 AND name = %1";

List<String> params = new ArrayList<String>();

params.add("0");

params.add("BILL");

QueryCondition<Foo> queryCond = fooDr.createQueryCondition(ds,expr,params);

| states | The returned condition will only trigger on samples with one of these sample states, view states, and instance states. |

| queryExpression | The returned condition will only trigger on samples that pass this content-based filter expression. |

| queryParameters | A set of parameter values for the queryExpression. |

| org.omg.dds.core.DDSException | An internal error has occurred. |

| org.omg.dds.core.AlreadyClosedException | The corresponding DataReader has been closed. |

| org.omg.dds.core.OutOfResourcesException | The Data Distribution Service ran out of resources to complete this operation. |

Implemented in org.opensplice.dds.sub.AbstractDataReader< TYPE >.

|

inherited |

This operation creates a QueryCondition.

The returned QueryCondition will be attached and belong to the DataReader.

The selection of the content is done using the queryExpression with parameters queryParameters.

State Masks

The result of the QueryCondition also depends on the selection of samples determined by three org.omg.dds.sub.Subscriber.DataState masks: the sample state, the view state, and the instance state.

Example:

This example assumes that a Subscriber called "subscriber" and a DataReader named "fooDr" have been created

and that a datatype called Foo is present: class Foo { int age; String name; };

// Create a QueryCondition with a DataState with a sample state of not read, new view state and alive instance state.

// And apply a condition that only samples with age > 0 and name equals BILL are shown.

DataState ds = subscriber.createDataState();

ds = ds.with(SampleState.NOT_READ)

.with(ViewState.NEW)

.with(InstanceState.ALIVE);

String expr = "age > %0 AND name = %1";

QueryCondition<Foo> queryCond = fooDr.createQueryCondition(ds,expr,"0","BILL");

| states | The returned condition will only trigger on samples with one of these sample states, view states, and instance states. |

| queryExpression | The returned condition will only trigger on samples that pass this content-based filter expression. |

| queryParameters | A set of parameter values for the queryExpression. |

| org.omg.dds.core.DDSException | An internal error has occurred. |

| org.omg.dds.core.AlreadyClosedException | The corresponding DataReader has been closed. |

| org.omg.dds.core.OutOfResourcesException | The Data Distribution Service ran out of resources to complete this operation. |

Implemented in org.opensplice.dds.sub.AbstractDataReader< TYPE >.

|

inherited |

This operation creates a ReadCondition.

The returned ReadCondition will be attached and belong to the DataReader.

State Masks

The result of the ReadCondition depends on the selection of samples determined by three org.omg.dds.sub.Subscriber.DataState masks: the sample state, the view state, and the instance state.

Example:

This example assumes that a Subscriber called "subscriber" and a DataReader named "fooDr" have been created

and that a datatype called Foo is present: class Foo { int age; String name; };

// Create a ReadCondition with a DataState with a sample state of not read, new view state and alive instance state.

DataState ds = subscriber.createDataState();

ds = ds.with(SampleState.NOT_READ)

.with(ViewState.NEW)

.with(InstanceState.ALIVE);

ReadCondition<Foo> readCond = fooDr.createReadCondition(ds);

| states | The returned condition will only trigger on samples with one of these sample states, view states, and instance states. |

| org.omg.dds.core.DDSException | An internal error has occurred. |

| org.omg.dds.core.AlreadyClosedException | The corresponding DataReader has been closed. |

| org.omg.dds.core.OutOfResourcesException | The Data Distribution Service ran out of resources to complete this operation. |

Implemented in org.opensplice.dds.sub.AbstractDataReader< TYPE >.
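To illustrate how a DataState selects samples, the sketch below models it as three independent sets, one each for sample state, view state, and instance state. It is a self-contained toy, not the OpenSplice implementation: the class `DataStateSketch`, its nested enums, and the `matches` method are hypothetical stand-ins, and the rule that a sample must match all three sets is an assumption of this sketch.

```java
import java.util.EnumSet;

// Hypothetical toy model, not part of org.omg.dds or OpenSplice.
final class DataStateSketch {
    enum SampleState { READ, NOT_READ }
    enum ViewState { NEW, NOT_NEW }
    enum InstanceState { ALIVE, NOT_ALIVE_DISPOSED, NOT_ALIVE_NO_WRITERS }

    private final EnumSet<SampleState> sampleStates = EnumSet.noneOf(SampleState.class);
    private final EnumSet<ViewState> viewStates = EnumSet.noneOf(ViewState.class);
    private final EnumSet<InstanceState> instanceStates = EnumSet.noneOf(InstanceState.class);

    // Each with(...) adds one accepted state to the corresponding mask.
    DataStateSketch with(SampleState s) { sampleStates.add(s); return this; }
    DataStateSketch with(ViewState v) { viewStates.add(v); return this; }
    DataStateSketch with(InstanceState i) { instanceStates.add(i); return this; }

    // Assumption of this sketch: a sample is selected only if all three masks accept it.
    boolean matches(SampleState s, ViewState v, InstanceState i) {
        return sampleStates.contains(s) && viewStates.contains(v) && instanceStates.contains(i);
    }

    public static void main(String[] args) {
        DataStateSketch ds = new DataStateSketch()
                .with(SampleState.NOT_READ)
                .with(ViewState.NEW)
                .with(InstanceState.ALIVE);
        System.out.println(ds.matches(SampleState.NOT_READ, ViewState.NEW, InstanceState.ALIVE));
        System.out.println(ds.matches(SampleState.READ, ViewState.NEW, InstanceState.ALIVE));
    }
}
```

Adding a second value to one mask, for example `with(SampleState.READ)`, widens only that mask; the other two continue to restrict the selection.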

|

inherited |

This operation enables the Entity.

Entity objects can be created either enabled or disabled. This is controlled by the value of the org.omg.dds.core.policy.EntityFactory on the corresponding factory for the Entity.

The default setting of org.omg.dds.core.policy.EntityFactory is such that, by default, it is not necessary to explicitly call enable on newly created entities.

The enable operation is idempotent. Calling enable on an already enabled Entity has no effect.

If an Entity has not yet been enabled, the following kinds of operations may be invoked on it:

Other operations may explicitly state that they may be called on disabled entities; those that do not will fail with org.omg.dds.core.NotEnabledException.

It is legal to delete an Entity that has not been enabled by calling close(). Entities created from a factory that is disabled are created disabled regardless of the setting of org.omg.dds.core.policy.EntityFactory.

Calling enable on an Entity whose factory is not enabled will fail with org.omg.dds.core.PreconditionNotMetException.

If org.omg.dds.core.policy.EntityFactory#isAutoEnableCreatedEntities() is true, the enable operation on the factory will automatically enable all entities created from the factory.

The Listeners associated with an entity are not called until the entity is enabled. org.omg.dds.core.Conditions associated with an entity that is not enabled are "inactive," that is, have a triggerValue == false.

In addition to the general description, the enable operation on a org.omg.dds.sub.Subscriber has special meaning in specific use cases. This applies only to Subscribers with PresentationQoS coherent-access set to true with access-scope set to group.

In this case the subscriber is always created in a disabled state, regardless of the factory's auto-enable created entities setting. While the subscriber remains disabled, DataReaders can be created that will participate in coherent transactions of the subscriber.

See org.omg.dds.sub.Subscriber#beginAccess() and org.omg.dds.sub.Subscriber#endAccess() for more information.

All DataReaders will also be created in a disabled state. Coherency with group access-scope requires data to be delivered as a transaction, atomically, to all eligible readers. Therefore data should not be delivered to any single DataReader immediately after it's created, as usual, but only after the application has finished creating all DataReaders for a given Subscriber. At this point, the application should enable the Subscriber which in turn enables all its DataReaders.

Note that when a DataWriter belongs to a Publisher whose PresentationQoS has coherent-access set to true and access-scope set to topic or group, the HistoryQoS of the DataWriter should be set to KEEP_ALL; otherwise the enable operation will fail. See org.omg.dds.pub.Publisher#createDataWriter(Topic, DataWriterQos, DataWriterListener, Collection).
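The general enable rules described above (idempotence, failure when the factory is disabled, and auto-enabling of created entities) can be sketched with a small toy model. This is not OpenSplice code: `ToyEntity` is a hypothetical class, and `IllegalStateException` stands in for org.omg.dds.core.PreconditionNotMetException so that the example stays self-contained.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical toy model of the enable rules, not part of org.omg.dds.
final class ToyEntity {
    private final ToyEntity factory;                  // null for the root entity
    private final boolean autoEnableCreatedEntities;  // models the EntityFactory policy
    private final List<ToyEntity> created = new ArrayList<ToyEntity>();
    private boolean enabled;

    ToyEntity(ToyEntity factory, boolean autoEnableCreatedEntities) {
        this.factory = factory;
        this.autoEnableCreatedEntities = autoEnableCreatedEntities;
        if (factory != null) {
            factory.created.add(this);
            // Entities created from a disabled factory are created disabled.
            this.enabled = factory.enabled && factory.autoEnableCreatedEntities;
        }
    }

    void enable() {
        if (enabled) {
            return; // idempotent: enabling twice has no effect
        }
        if (factory != null && !factory.enabled) {
            // Stand-in for PreconditionNotMetException.
            throw new IllegalStateException("factory is not enabled");
        }
        enabled = true;
        if (autoEnableCreatedEntities) {
            for (ToyEntity child : created) {
                child.enable();
            }
        }
    }

    boolean isEnabled() {
        return enabled;
    }
}
```

In this model, creating a child from a disabled root leaves the child disabled, calling `enable()` on the child first fails, and calling `enable()` on the root then enables both.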

|

inherited |

|

inherited |

This operation can be used to retrieve the instance key that corresponds to an instance handle.

The operation will only fill the fields that form the key inside the keyHolder instance.

| keyHolder | a container, into which this method shall place its result. |

| handle | a handle indicating the instance whose value this method should get. |

| IllegalArgumentException | if the org.omg.dds.core.InstanceHandle does not correspond to an existing data object known to the DataReader. If the implementation is not able to check invalid handles, then the result in this situation is unspecified. |

| org.omg.dds.core.DDSException | An internal error has occurred. |

| org.omg.dds.core.AlreadyClosedException | The corresponding DataReader has been closed. |

| org.omg.dds.core.OutOfResourcesException | The Data Distribution Service ran out of resources to complete this operation. |

Implemented in org.opensplice.dds.sub.DataReaderImpl< TYPE >.

|

inherited |

This operation can be used to retrieve the instance key that corresponds to an instance handle.

The operation will only fill the fields that form the key inside the keyHolder instance.

| handle | a handle indicating the instance whose value this method should get. |

| IllegalArgumentException | if the org.omg.dds.core.InstanceHandle does not correspond to an existing data object known to the DataReader. If the implementation is not able to check invalid handles, then the result in this situation is unspecified. |

| org.omg.dds.core.DDSException | An internal error has occurred. |

| org.omg.dds.core.AlreadyClosedException | The corresponding DataReader has been closed. |

| org.omg.dds.core.OutOfResourcesException | The Data Distribution Service ran out of resources to complete this operation. |

Implemented in org.opensplice.dds.sub.DataReaderImpl< TYPE >.

|

inherited |

This operation allows access to the existing Listener attached to the Entity.

|

inherited |

This operation obtains the LivelinessChangedStatus object of the DataReader.

This object contains the information whether the liveliness of one or more DataWriter objects that were writing instances read by the DataReader has changed. In other words, some DataWriters have become "alive" or "not alive".

The LivelinessChangedStatus can also be monitored using a DataReaderListener or by using the associated StatusCondition.

| org.omg.dds.core.DDSException | An internal error has occurred. |

| org.omg.dds.core.AlreadyClosedException | The corresponding DataReader has been closed. |

| org.omg.dds.core.OutOfResourcesException | The Data Distribution Service ran out of resources to complete this operation. |

Implemented in org.opensplice.dds.sub.AbstractDataReader< TYPE >.

|

inherited |

This operation retrieves information on a publication that is currently "associated" with the DataReader; that is, a publication with a matching org.omg.dds.topic.Topic and compatible QoS that the application has not indicated should be "ignored" by means of org.omg.dds.domain.DomainParticipant#ignorePublication(InstanceHandle).

The operation getMatchedPublications() can be used to find the publications that are currently matched with the DataReader.

| publicationHandle | a handle to the publication, the data of which is to be retrieved. |

| IllegalArgumentException | if the publicationHandle does not correspond to a publication currently associated with the DataReader. |

| UnsupportedOperationException | if the infrastructure does not hold the information necessary to fill in the publicationData. |

| org.omg.dds.core.DDSException | An internal error has occurred. |

| org.omg.dds.core.AlreadyClosedException | The corresponding DataReader has been closed. |

| org.omg.dds.core.OutOfResourcesException | The Data Distribution Service ran out of resources to complete this operation. |


Implemented in org.opensplice.dds.sub.AbstractDataReader< TYPE >.

|

inherited |

This operation retrieves the list of publications currently "associated" with the DataReader; that is, publications that have a matching org.omg.dds.topic.Topic and compatible QoS that the application has not indicated should be "ignored" by means of org.omg.dds.domain.DomainParticipant#ignorePublication(InstanceHandle).

The handles returned in the 'publicationHandles' list are the ones that are used by the DDS implementation to locally identify the corresponding matched DataWriter entities. These handles match the ones that appear in org.omg.dds.sub.Sample#getInstanceHandle() when reading the "DCPSPublications" built-in topic.

Be aware that, since an instance handle is an opaque datatype, the handles obtained from the getMatchedPublications operation do not necessarily have the same value as the ones that appear in the instanceHandle field of the SampleInfo when retrieving the publication info through the corresponding "DCPSPublications" built-in reader. You can't just compare two handles to determine whether they represent the same publication. If you want to know whether two handles actually do represent the same publication, use both handles to retrieve their corresponding PublicationBuiltinTopicData samples and then compare the key field of both samples.

The operation may fail with an UnsupportedOperationException if the infrastructure does not locally maintain the connectivity information.

| org.omg.dds.core.DDSException | An internal error has occurred. |

| org.omg.dds.core.AlreadyClosedException | The corresponding DataReader has been closed. |

| org.omg.dds.core.OutOfResourcesException | The Data Distribution Service ran out of resources to complete this operation. |

| UnsupportedOperationException | if the infrastructure does not hold the information necessary to fill in the publicationData. |

Implemented in org.opensplice.dds.sub.AbstractDataReader< TYPE >.

|

inherited |

Implements org.omg.dds.core.DomainEntity< LISTENER extends EventListener, QOS extends EntityQos<?> >.

Implemented in org.opensplice.dds.sub.AbstractDataReader< TYPE >.

|

inherited |

This operation allows access to the existing set of QoS policies for the Entity.

This operation must be provided by each of the derived Entity classes (org.omg.dds.domain.DomainParticipant, org.omg.dds.topic.Topic, org.omg.dds.pub.Publisher, org.omg.dds.pub.DataWriter, org.omg.dds.sub.Subscriber, org.omg.dds.sub.DataReader) so that the policies meaningful to the particular Entity are retrieved.

|

inherited |

This operation obtains the RequestedDeadlineMissedStatus object of the DataReader.

This object contains the information whether the deadline that the DataReader was expecting through its DeadlineQosPolicy was not respected for a specific instance.

The RequestedDeadlineMissedStatus can also be monitored using a DataReaderListener or by using the associated StatusCondition.

| org.omg.dds.core.DDSException | An internal error has occurred. |

| org.omg.dds.core.AlreadyClosedException | The corresponding DataReader has been closed. |

| org.omg.dds.core.OutOfResourcesException | The Data Distribution Service ran out of resources to complete this operation. |

Implemented in org.opensplice.dds.sub.AbstractDataReader< TYPE >.

|

inherited |

This operation obtains the RequestedIncompatibleQosStatus object of the DataReader.

This object contains the information whether a requested QosPolicy setting was incompatible with the offered QosPolicy setting. The Request/Offering mechanism is applicable between the DataWriter and the DataReader. If the QosPolicy settings between DataWriter and DataReader are inconsistent, no communication between them is established. In addition, the DataWriter will be informed via an OfferedIncompatibleQos status change and the DataReader will be informed via a RequestedIncompatibleQos status change.

The RequestedIncompatibleQosStatus can also be monitored using a DataReaderListener or by using the associated StatusCondition.

| org.omg.dds.core.DDSException | An internal error has occurred. |

| org.omg.dds.core.AlreadyClosedException | The corresponding DataReader has been closed. |

| org.omg.dds.core.OutOfResourcesException | The Data Distribution Service ran out of resources to complete this operation. |

Implemented in org.opensplice.dds.sub.AbstractDataReader< TYPE >.

|

inherited |

This operation obtains the SampleLostStatus object of the DataReader.

This object contains information whether samples have been lost. This only applies when the ReliabilityQosPolicy is set to RELIABLE. If the ReliabilityQosPolicy is set to BEST_EFFORT the Data Distribution Service will not report the loss of samples.

The SampleLostStatus can also be monitored using a DataReaderListener or by using the associated StatusCondition.

| org.omg.dds.core.DDSException | An internal error has occurred. |

| org.omg.dds.core.AlreadyClosedException | The corresponding DataReader has been closed. |

| org.omg.dds.core.OutOfResourcesException | The Data Distribution Service ran out of resources to complete this operation. |

Implemented in org.opensplice.dds.sub.AbstractDataReader< TYPE >.

|

inherited |

This operation obtains the SampleRejectedStatus object of the DataReader.

This object contains the information whether a received sample has been rejected. Samples may be rejected by the DataReader when it runs out of resourceLimits to store incoming samples. Usually this means that old samples need to be 'consumed' (for example by 'taking' them instead of 'reading' them) to make room for newly incoming samples.

The SampleRejectedStatus can also be monitored using a DataReaderListener or by using the associated StatusCondition.

| org.omg.dds.core.DDSException | An internal error has occurred. |

| org.omg.dds.core.AlreadyClosedException | The corresponding DataReader has been closed. |

| org.omg.dds.core.OutOfResourcesException | The Data Distribution Service ran out of resources to complete this operation. |

Implemented in org.opensplice.dds.sub.AbstractDataReader< TYPE >.

|

inherited |

This operation retrieves the list of communication statuses in the Entity that are 'triggered', that is, the list of statuses whose value has changed since the last time the application read the status.

When the entity is first created or if the entity is not enabled, all communication statuses are in the "untriggered" state so the list returned will be empty.

The list of statuses returned refers to the statuses that are triggered on the Entity itself and does not include statuses that apply to contained entities.

|

inherited |

Implemented in org.opensplice.dds.sub.AbstractDataReader< TYPE >.

|

inherited |

This operation obtains the SubscriptionMatchedStatus object of the DataReader.

This object indicates whether a new match has been discovered for the current subscription, or whether an existing match has ceased to exist. That is, either a DataWriter with the same Topic and a compatible Qos has been discovered by the DataReader, or a previously discovered DataWriter has ceased to be matched to the current DataReader. A DataWriter may cease to match when it is deleted, when it changes its Qos to a value that is incompatible with the current DataReader, or when either the DataReader or the DataWriter has chosen to put its matching counterpart on its ignore-list using the ignorePublication or ignoreSubscription operations on the DomainParticipant.

The operation may fail if the infrastructure does not hold the information necessary to fill in the SubscriptionMatchedStatus. This is the case when OpenSplice is configured not to maintain discovery information in the Networking Service. (See the description of the NetworkingService/Discovery/enabled property in the Deployment Manual for more information.) In this case the operation will throw an UnsupportedOperationException.

The SubscriptionMatchedStatus can also be monitored using a DataReaderListener or by using the associated StatusCondition.

| org.omg.dds.core.DDSException | An internal error has occurred. |

| org.omg.dds.core.AlreadyClosedException | The corresponding DataReader has been closed. |

| org.omg.dds.core.OutOfResourcesException | The Data Distribution Service ran out of resources to complete this operation. |

Implemented in org.opensplice.dds.sub.AbstractDataReader< TYPE >.

|

inherited |

Implemented in org.opensplice.dds.sub.AbstractDataReader< TYPE >.

|

inherited |

This operation takes as a parameter an instance and returns a handle that can be used in subsequent operations that accept an instance handle as an argument.

The instance parameter is only used for the purpose of examining the fields that define the key.

This operation does not register the instance in question. If the instance has not been previously registered, or if for any other reason the Service is unable to provide an instance handle, the Service will return a nil handle.

| keyHolder | a sample of the instance whose handle this method should look up. |

| org.omg.dds.core.DDSException | An internal error has occurred. |

| org.omg.dds.core.AlreadyClosedException | The corresponding DataReader has been closed. |

| org.omg.dds.core.OutOfResourcesException | The Data Distribution Service ran out of resources to complete this operation. |

Implemented in org.opensplice.dds.sub.DataReaderImpl< TYPE >.

|

inherited |

This operation accesses a collection of samples from this DataReader.

It behaves exactly like read(Selector) except that the collection of returned samples is not constrained by any Selector. These samples contain the actual data and meta information.

Invalid Data

Some elements in the returned sequence may not have valid data: the valid_data field in the SampleInfo indicates whether the corresponding data value contains any meaningful data. If not, the data value is just a 'dummy' sample for which only the keyfields have been assigned. It is used to accompany the SampleInfo that communicates a change in the instanceState of an instance for which there is no 'real' sample available.

For example, when an application always 'takes' all available samples of a particular instance, there is no sample available to report the disposal of that instance. In such a case the DataReader will insert a dummy sample into the data values sequence to accompany the SampleInfo element that communicates the disposal of the instance.

The act of reading a sample sets its sample_state to SampleState.READ. If the sample belongs to the most recent generation of the instance, it also sets the viewState of the instance to ViewState.NOT_NEW. It does not affect the instanceState of the instance.

Example:

// Read samples

Iterator<Sample<Foo>> samples = fooDr.read();

// Process each Sample and print its name and production time.

while (samples.hasNext()) {

Sample<Foo> sample = samples.next();

Foo foo = sample.getData();

if (foo != null) { //Check if the sample is valid.

Time t = sample.getSourceTimestamp(); // meta information

System.out.println("Name: " + foo.myName + " is produced at " + t.getTime(TimeUnit.SECONDS));

}

}

| org.omg.dds.core.DDSException | An internal error has occurred. |

| org.omg.dds.core.AlreadyClosedException | The corresponding DataReader has been closed. |

| org.omg.dds.core.OutOfResourcesException | The Data Distribution Service ran out of resources to complete this operation. |

Implemented in org.opensplice.dds.sub.AbstractDataReader< TYPE >.

|

inherited |

This operation accesses a collection of samples from this DataReader.

The returned samples will be limited by the given Selector. The setting of the org.omg.dds.core.policy.Presentation may impose further limits on the returned samples.

In any case, the relative order between the samples of one instance is consistent with the org.omg.dds.core.policy.DestinationOrder.

In addition to the sample data, the read operation also provides sample meta-information ("sample info"). See org.omg.dds.sub.Sample.

The returned samples are "loaned" by the DataReader. The use of this variant allows for zero-copy (assuming the implementation supports it) access to the data and the application will need to "return the loan" to the DataReader using the Sample.Iterator#close() operation.

Some elements in the returned collection may not have valid data. If the instance state in the Sample is org.omg.dds.sub.InstanceState#NOT_ALIVE_DISPOSED or org.omg.dds.sub.InstanceState#NOT_ALIVE_NO_WRITERS, then the last sample for that instance in the collection, that is, the one with org.omg.dds.sub.Sample#getSampleRank() == 0, does not contain valid data. Samples that contain no data do not count towards the limits imposed by the org.omg.dds.core.policy.ResourceLimits.

The act of reading a sample sets its sample state to org.omg.dds.sub.SampleState#READ. If the sample belongs to the most recent generation of the instance, it will also set the view state of the instance to org.omg.dds.sub.ViewState#NOT_NEW. It will not affect the instance state of the instance.

If the DataReader has no samples that meet the constraints, the return value will be a non-null iterator that provides no samples.

Invalid Data

Some elements in the returned sequence may not have valid data: the valid_data field in the SampleInfo indicates whether the corresponding data value contains any meaningful data. If not, the data value is just a 'dummy' sample for which only the keyfields have been assigned. It is used to accompany the SampleInfo that communicates a change in the instanceState of an instance for which there is no 'real' sample available.

For example, when an application always 'takes' all available samples of a particular instance, there is no sample available to report the disposal of that instance. In such a case the DataReader will insert a dummy sample into the data values sequence to accompany the SampleInfo element that communicates the disposal of the instance.

The act of reading a sample sets its sample_state to SampleState.READ. If the sample belongs to the most recent generation of the instance, it also sets the viewState of the instance to ViewState.NOT_NEW. It does not affect the instanceState of the instance.

Example:

// create a dataState that only reads not read, new and alive samples

DataState ds = subscriber.createDataState();

ds = ds.with(SampleState.NOT_READ)

.with(ViewState.NEW)

.with(InstanceState.ALIVE);

// Read samples through a selector with the set dataState

Selector<Foo> query = fooDr.select();

query.dataState(ds);

Iterator<Sample<Foo>> samples = fooDr.read(query);

// Process each Sample and print its name and production time.

while (samples.hasNext()) {

Sample<Foo> sample = samples.next();

Foo foo = sample.getData();

if (foo != null) { //Check if the sample is valid.

Time t = sample.getSourceTimestamp(); // meta information

System.out.println("Name: " + foo.myName + " is produced at " + t.getTime(TimeUnit.SECONDS));

}

}

| query | a selector to be used on the read |

| org.omg.dds.core.DDSException | An internal error has occurred. |

| org.omg.dds.core.AlreadyClosedException | The corresponding DataReader has been closed. |

| org.omg.dds.core.OutOfResourcesException | The Data Distribution Service ran out of resources to complete this operation. |
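The loan described for read(Selector) should be returned even when processing fails part-way through. The sketch below shows one way to guarantee that with try-with-resources. LoanedIterator and LoanDemo are hypothetical stand-ins written for this illustration, not OpenSplice types. They assume only that the real iterator exposes a close() operation, as stated above, and that a sample without valid data yields null from getData(), as in the example above.

```java
import java.io.Closeable;
import java.util.Iterator;
import java.util.List;

/** Hypothetical stand-in for a loaned sample iterator; it only models close(). */
final class LoanedIterator<T> implements Iterator<T>, Closeable {
    private final Iterator<T> delegate;
    private boolean closed;

    LoanedIterator(List<T> samples) {
        this.delegate = samples.iterator();
    }

    @Override
    public boolean hasNext() {
        return !closed && delegate.hasNext();
    }

    @Override
    public T next() {
        if (closed) {
            throw new IllegalStateException("loan already returned");
        }
        return delegate.next();
    }

    /** Models "returning the loan" to the reader. */
    @Override
    public void close() {
        closed = true;
    }

    boolean isClosed() {
        return closed;
    }
}

final class LoanDemo {
    private LoanDemo() {}

    /** Counts the valid (non-null) samples and always returns the loan. */
    static int countValid(LoanedIterator<String> samples) {
        int valid = 0;
        // try-with-resources calls close() on normal exit and on exceptions.
        try (LoanedIterator<String> it = samples) {
            while (it.hasNext()) {
                if (it.next() != null) {
                    valid++;
                }
            }
        }
        return valid;
    }
}
```

With the real API the same shape would apply to the iterator returned by read or take, provided its close() operation is reachable through a Closeable or AutoCloseable type.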

|

inherited |

This operation accesses a collection of samples from this DataReader.

It behaves exactly like read() except that no more than maxSamples samples are returned.

| maxSamples | The maximum number of samples to read |

| org.omg.dds.core.DDSException | An internal error has occurred. |

| org.omg.dds.core.AlreadyClosedException | The corresponding DataReader has been closed. |

| org.omg.dds.core.OutOfResourcesException | The Data Distribution Service ran out of resources to complete this operation. |

Implemented in org.opensplice.dds.sub.AbstractDataReader< TYPE >.

|

inherited |

This operation accesses a collection of samples from this DataReader.

It behaves exactly like read() except that the returned samples are not "on loan" from the Service; they are deeply copied to the application.

If the number of samples read is fewer than the current length of the list, the list will be trimmed to fit the samples read. If list is null, a new list will be allocated and its size may be zero or unbounded depending upon the number of samples read. If there are no samples, the list reference will be non-null and the list will contain zero samples.

The read operation will copy the data and meta-information into the elements already inside the given collection, overwriting any samples that might already be present. The use of this variant forces a copy but the application can control where the copy is placed and the application will not need to "return the loan."

// Read samples and store them in a pre allocated collection

List<Sample<Foo>> samples = new ArrayList<Sample<Foo>>();

fooDr.read(samples);

// Process each Sample and print its name and production time.

for (Sample<Foo> sample : samples) {

Foo foo = sample.getData();

if (foo != null) { //Check if the sample is valid.

Time t = sample.getSourceTimestamp();

System.out.println("Name: " + foo.myName + " is produced at " + t.getTime(TimeUnit.SECONDS));

}

}

| samples | A pre allocated collection to store the samples in |

Returns: samples, for convenience.

| org.omg.dds.core.DDSException | An internal error has occurred. |

| org.omg.dds.core.AlreadyClosedException | The corresponding DataReader has been closed. |

| org.omg.dds.core.OutOfResourcesException | The Data Distribution Service ran out of resources to complete this operation. |

Implemented in org.opensplice.dds.sub.AbstractDataReader< TYPE >.

|

inherited |

This operation accesses a collection of samples from this DataReader.

It behaves exactly like read(Selector) except that the returned samples are not "on loan" from the Service; they are deeply copied to the application.

The number of samples is specified as the minimum of Selector#getMaxSamples() and the length of the list. If the number of samples read is fewer than the current length of the list, the list will be trimmed to fit the samples read. If list is null, a new list will be allocated and its size may be zero or unbounded depending upon the number of samples read. If there are no samples, the list reference will be non-null and the list will contain zero samples.

The read operation will copy the data and meta-information into the elements already inside the given collection, overwriting any samples that might already be present. The use of this variant forces a copy but the application can control where the copy is placed and the application will not need to "return the loan."

| samples | A pre allocated collection to store the samples in |

| selector | A selector to be used on the read |

Returns: samples, for convenience.

| org.omg.dds.core.DDSException | An internal error has occurred. |

| org.omg.dds.core.AlreadyClosedException | The corresponding DataReader has been closed. |

| org.omg.dds.core.OutOfResourcesException | The Data Distribution Service ran out of resources to complete this operation. |

|

inherited |

This operation copies the next, non-previously accessed sample from this DataReader.

The implied order among the samples stored in the DataReader is the same as for read(List, Selector).

This operation is semantically equivalent to read(List, Selector) where Selector#getMaxSamples() is 1, Selector#getDataState() followed by Subscriber.DataState#getSampleStates() == org.omg.dds.sub.SampleState#NOT_READ, Selector#getDataState() followed by Subscriber.DataState#getViewStates() contains all view states, and Selector#getDataState() followed by Subscriber.DataState#getInstanceStates() contains all instance states.

This operation provides a simplified API to "read" samples avoiding the need for the application to manage iterators and specify queries.

If there is no unread data in the DataReader, the operation will return false and the provided sample is not modified.

| sample | A valid sample that will contain the new data. |

| org.omg.dds.core.DDSException | An internal error has occurred. |

| org.omg.dds.core.AlreadyClosedException | The corresponding DataReader has been closed. |

| org.omg.dds.core.OutOfResourcesException | The Data Distribution Service ran out of resources to complete this operation. |

Implemented in org.opensplice.dds.sub.DataReaderImpl< TYPE >.
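Because readNextSample returns false and leaves the provided sample untouched when no unread data remains, a caller can drain the unread samples with a plain loop and a single reusable holder. The sketch below illustrates that loop. Holder, FakeReader and DrainDemo are hypothetical stand-ins invented for this example; they model only the boolean return contract described above and are not the org.omg.dds.sub types.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

/** Hypothetical holder standing in for a Sample; not org.omg.dds.sub.Sample. */
final class Holder<T> {
    T data;
}

/** Hypothetical reader that exposes only a readNextSample-style call. */
final class FakeReader<T> {
    private final Deque<T> unread = new ArrayDeque<T>();

    FakeReader(List<T> initial) {
        unread.addAll(initial); // ArrayDeque rejects null elements
    }

    /** Copies the next unread value into the holder; returns false and leaves it untouched if none is left. */
    boolean readNextSample(Holder<T> holder) {
        T next = unread.poll();
        if (next == null) {
            return false;
        }
        holder.data = next;
        return true;
    }
}

final class DrainDemo {
    private DrainDemo() {}

    /** Collects every unread value, reusing one holder across calls. */
    static <T> List<T> drain(FakeReader<T> reader) {
        List<T> out = new ArrayList<T>();
        Holder<T> holder = new Holder<T>();
        while (reader.readNextSample(holder)) {
            out.add(holder.data);
        }
        return out;
    }
}
```

Reusing one holder avoids an allocation per sample, which is the main convenience this variant offers over the iterator-based reads.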

|

inherited |

Indicates that references to this object may go out of scope but that the application expects to look it up again later.

Therefore, the Service must consider this object to be still in use and may not close it automatically.

|

inherited |

Provides a Selector that can be used to refine what read or take methods return.

Implemented in org.opensplice.dds.sub.AbstractDataReader< TYPE >.

|

inherited |

This operation installs a Listener on the Entity.

The listener will only be invoked on all communication statuses pertaining to the concrete type of this entity.

It is permitted to use null as the value of the listener. The null listener behaves as a Listener whose operations perform no action.

Only one listener can be attached to each Entity. If a listener was already set, the operation will replace it with the new one. Consequently if the value null is passed for the listener parameter, any existing listener will be removed.

| listener | the listener to attach. |

|

inherited |

This operation installs a Listener on the Entity.

The listener will only be invoked on the changes of communication status indicated by the specified mask.

It is permitted to use null as the value of the listener. The null listener behaves as a Listener whose operations perform no action.

Only one listener can be attached to each Entity. If a listener was already set, the operation will replace it with the new one. Consequently if the value null is passed for the listener parameter, any existing listener will be removed.

|

inherited |

This operation installs a Listener on the Entity.

The listener will only be invoked on the changes of communication status indicated by the specified mask.

It is permitted to use null as the value of the listener. The null listener behaves as a Listener whose operations perform no action.

Only one listener can be attached to each Entity. If a listener was already set, the operation will replace it with the new one. Consequently if the value null is passed for the listener parameter, any existing listener will be removed.

|

inherited |

This operation is used to set the QoS policies of the Entity.

This operation must be provided by each of the derived Entity classes (org.omg.dds.domain.DomainParticipant, org.omg.dds.topic.Topic, org.omg.dds.pub.Publisher, org.omg.dds.pub.DataWriter, org.omg.dds.sub.Subscriber, org.omg.dds.sub.DataReader) so that the policies that are meaningful to each Entity can be set.

The set of policies specified as the parameter are applied on top of the existing QoS, replacing the values of any policies previously set.

Certain policies are "immutable"; they can only be set at Entity creation time, or before the entity is made enabled. If setQos is invoked after the Entity is enabled and it attempts to change the value of an "immutable" policy, the operation will fail with org.omg.dds.core.ImmutablePolicyException.

Certain values of QoS policies can be incompatible with the settings of the other policies. The setQos operation will also fail if it specifies a set of values that once combined with the existing values would result in an inconsistent set of policies. In this case, it shall fail with org.omg.dds.core.InconsistentPolicyException.

If the application supplies a non-default value for a QoS policy that is not supported by the implementation of the service, the setQos operation will fail with UnsupportedOperationException.

The existing set of policies is only changed if the setQos operation succeeds. In all other cases, none of the policies is modified.

| ImmutablePolicyException | if an immutable policy changes its value. |

| InconsistentPolicyException | if a combination of policies is inconsistent with one another. |

| UnsupportedOperationException | if an unsupported policy has a non-default value. |
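The all-or-nothing behaviour of setQos can be pictured as "validate a merged copy, then commit". The sketch below is a toy model of that idea, not a description of how OpenSplice implements it. QosModel, the policy names and the use of IllegalStateException (in place of ImmutablePolicyException) are all assumptions made for illustration.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.Map;
import java.util.Set;

/** Hypothetical model of an entity's QoS; names and rules are invented. */
final class QosModel {
    private final Set<String> immutable;
    private final boolean enabled;
    private Map<String, String> policies;

    QosModel(Map<String, String> initial, Set<String> immutable, boolean enabled) {
        this.policies = new HashMap<String, String>(initial);
        this.immutable = immutable;
        this.enabled = enabled;
    }

    /** Applies the changes on top of the existing policies, or changes nothing at all. */
    void setQos(Map<String, String> changes) {
        // Work on a copy so a failed check cannot leave a partial update behind.
        Map<String, String> candidate = new HashMap<String, String>(policies);
        for (Map.Entry<String, String> e : changes.entrySet()) {
            boolean differs = !e.getValue().equals(candidate.get(e.getKey()));
            if (enabled && differs && immutable.contains(e.getKey())) {
                throw new IllegalStateException("immutable policy: " + e.getKey());
            }
            candidate.put(e.getKey(), e.getValue());
        }
        policies = candidate; // commit only after every check has passed
    }

    Map<String, String> getQos() {
        return Collections.unmodifiableMap(policies);
    }
}
```

For the caller, the practical consequence is that after a failed setQos the previously read QoS is still accurate and can be reused without calling getQos again.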

|

inherited |

This operation accesses a collection of samples from this DataReader.

The behavior is identical to read except that the samples are removed from the DataReader.

Invalid Data

Some elements in the returned sequence may not have valid data: the valid_data field in the SampleInfo indicates whether the corresponding data value contains any meaningful data. If not, the data value is just a 'dummy' sample for which only the keyfields have been assigned. It is used to accompany the SampleInfo that communicates a change in the instanceState of an instance for which there is no 'real' sample available.

For example, when an application always 'takes' all available samples of a particular instance, there is no sample available to report the disposal of that instance. In such a case the DataReader will insert a dummy sample into the data values sequence to accompany the SampleInfo element that communicates the disposal of the instance.

Example:

// Take samples

Iterator<Sample<Foo>> samples = fooDr.take();

// Process each Sample and print its name and production time.

while (samples.hasNext()) {

Sample<Foo> sample = samples.next();

Foo foo = sample.getData();

if (foo != null) { //Check if the sample is valid.

Time t = sample.getSourceTimestamp(); // meta information

System.out.println("Name: " + foo.myName + " is produced at " + t.getTime(TimeUnit.SECONDS));

}

}

| org.omg.dds.core.DDSException | An internal error has occurred. |

| org.omg.dds.core.AlreadyClosedException | The corresponding DataReader has been closed. |

| org.omg.dds.core.OutOfResourcesException | The Data Distribution Service ran out of resources to complete this operation. |

Implemented in org.opensplice.dds.sub.AbstractDataReader< TYPE >.

|

inherited |

This operation accesses a collection of samples from this DataReader.

It behaves exactly like take(Selector) except that the collection of returned samples is not constrained by any Selector.

The number of samples accessible via the iterator will not be more than maxSamples.

| org.omg.dds.core.DDSException | An internal error has occurred. |

| org.omg.dds.core.AlreadyClosedException | The corresponding DataReader has been closed. |

| org.omg.dds.core.OutOfResourcesException | The Data Distribution Service ran out of resources to complete this operation. |

Implemented in org.opensplice.dds.sub.AbstractDataReader< TYPE >.

|

inherited |

This operation accesses a collection of samples from this DataReader.

The number of samples returned is controlled by the org.omg.dds.core.policy.Presentation and other factors using the same logic as for read(Selector).

The act of taking a sample removes it from the DataReader so it cannot be "read" or "taken" again. If the sample belongs to the most recent generation of the instance, it will also set the view state of the instance to org.omg.dds.sub.ViewState#NOT_NEW. It will not affect the instance state of the instance.

The behavior of the take operation follows the same rules as the read operation regarding the preconditions and postconditions for the arguments and return results. Similar to read, the take operation will "loan" elements to the application; this loan must then be returned by means of Sample.Iterator#close(). The only difference from read is that, as stated, the sample returned by take will no longer be accessible to successive calls to read or take.

If the DataReader has no samples that meet the constraints, the return value will be a non-null iterator that provides no samples.

Example:

// create a dataState that only selects not read, new and alive samples

DataState ds = subscriber.createDataState();

ds = ds.with(SampleState.NOT_READ)

.with(ViewState.NEW)

.with(InstanceState.ALIVE);

// Take samples through a selector with the set dataState

Selector<Foo> query = fooDr.select();

query.dataState(ds);

Iterator<Sample<Foo>> samples = fooDr.take(query);

// Process each Sample and print its name and production time.

while (samples.hasNext()) {

Sample<Foo> sample = samples.next();

Foo foo = sample.getData();

if (foo != null) { //Check if the sample is valid.

Time t = sample.getSourceTimestamp(); // meta information

System.out.println("Name: " + foo.myName + " is produced at " + t.getTime(TimeUnit.SECONDS));

}

}

| query | a selector to be used on the take |

| org.omg.dds.core.DDSException | An internal error has occurred. |

| org.omg.dds.core.AlreadyClosedException | The corresponding DataReader has been closed. |

| org.omg.dds.core.OutOfResourcesException | The Data Distribution Service ran out of resources to complete this operation. |

|

inherited |

This operation accesses a collection of samples from this DataReader.

It behaves exactly like take() except that the returned samples are not "on loan" from the Service; they are deeply copied to the application.

The take operation will copy the data and meta-information into the elements already inside the given collection, overwriting any samples that might already be present. The use of this variant forces a copy but the application can control where the copy is placed and the application will not need to "return the loan."

// Take samples and store them in a pre allocated collection

List<Sample<Foo>> samples = new ArrayList<Sample<Foo>>();

fooDr.take(samples);

// Process each Sample and print its name and production time.

for (Sample<Foo> sample : samples) {

Foo foo = sample.getData();

if (foo != null) { //Check if the sample is valid.

Time t = sample.getSourceTimestamp();

System.out.println("Name: " + foo.myName + " is produced at " + t.getTime(TimeUnit.SECONDS));

}

}

| samples | A pre allocated collection to store the samples in |

Returns: samples, for convenience.

| org.omg.dds.core.DDSException | An internal error has occurred. |

| org.omg.dds.core.AlreadyClosedException | The corresponding DataReader has been closed. |

| org.omg.dds.core.OutOfResourcesException | The Data Distribution Service ran out of resources to complete this operation. |

Implemented in org.opensplice.dds.sub.AbstractDataReader< TYPE >.

|

inherited |

This operation accesses a collection of samples from this DataReader.

It behaves exactly like take(Selector) except that the returned samples are not "on loan" from the Service; they are deeply copied to the application.

The take operation will copy the data and meta-information into the elements already inside the given collection, overwriting any samples that might already be present. The use of this variant forces a copy but the application can control where the copy is placed and the application will not need to "return the loan."

| samples | A pre allocated collection to store the samples in |

| query | A selector to be used on the take |

Returns: samples, for convenience.

| org.omg.dds.core.DDSException | An internal error has occurred. |

| org.omg.dds.core.AlreadyClosedException | The corresponding DataReader has been closed. |

| org.omg.dds.core.OutOfResourcesException | The Data Distribution Service ran out of resources to complete this operation. |

|

inherited |

This operation copies the next, non-previously accessed sample from this DataReader and "removes" it from the DataReader so it is no longer accessible.

This operation is analogous to readNextSample(Sample) except for the fact that the sample is "removed" from the DataReader.

This operation is semantically equivalent to take(List, Selector) where Selector#getMaxSamples() is 1, Selector#getDataState() followed by Subscriber.DataState#getSampleStates() == org.omg.dds.sub.SampleState#NOT_READ, Selector#getDataState() followed by Subscriber.DataState#getViewStates() contains all view states, and Selector#getDataState() followed by Subscriber.DataState#getInstanceStates() contains all instance states.

This operation provides a simplified API to "take" samples avoiding the need for the application to manage iterators and specify queries.

If there is no unread data in the DataReader, the operation will return false and the provided sample is not modified.

| sample | A valid sample that will contain the new data. |

| org.omg.dds.core.DDSException | An internal error has occurred. |

| org.omg.dds.core.AlreadyClosedException | The corresponding DataReader has been closed. |

| org.omg.dds.core.OutOfResourcesException | The Data Distribution Service ran out of resources to complete this operation. |

Implemented in org.opensplice.dds.sub.DataReaderImpl< TYPE >.

| void org.opensplice.dds.sub.DataReader< TYPE >.waitForHistoricalData | (String filterExpression, List< String > filterParameters, Time minSourceTimestamp, Time maxSourceTimestamp, ResourceLimits resourceLimits, Duration maxWait) throws TimeoutException |

This operation will block the application thread until all historical data that matches the supplied conditions is received.

This operation only makes sense when the receiving node has configured its durability service as an On_Request alignee. (See also the description of the //OpenSplice/DurabilityService/NameSpaces/Policy[] attribute in the Deployment Guide.) Otherwise the Durability Service will not distinguish between separate reader requests and still inject the full historical data set in each reader.

Additionally, when creating the DataReader, the DurabilityQos.kind of the DataReaderQos needs to be set to org.omg.dds.core.policy.Durability.Kind#VOLATILE to ensure that historical data that potentially is available already at creation time is not immediately delivered to the DataReader at that time.

| filterExpression | the SQL expression (subset of SQL), which defines the filtering criteria (null when no SQL filtering is needed). |

| filterParameters | sequence of strings with the parameter values used in the SQL expression (i.e., the values for the %n tokens in the expression). The number of values in filterParameters must be at least one more than the highest referenced %n token in the filterExpression (e.g. if %1 and %8 are used as parameters in the filterExpression, the filterParameters should contain at least n + 1 = 9 values). |

| minSourceTimestamp | Filter out all data published before this time. The special org.omg.dds.core.Time.invalidTime() can be used when no minimum filter is needed. |

| maxSourceTimestamp | Filter out all data published after this time. The special org.omg.dds.core.Time.invalidTime() can be used when no maximum filter is needed. |

| resourceLimits | Specifies limits on the maximum amount of historical data that may be received. |

| maxWait | The maximum duration the application thread is blocked during this operation. |

| TimeoutException | thrown when maxWait has expired before the applicable historical data has successfully been obtained. |

| org.omg.dds.core.DDSException | An internal error has occurred. |

| org.omg.dds.core.AlreadyClosedException | The corresponding DataReader has been closed. |

| org.omg.dds.core.OutOfResourcesException | The Data Distribution Service ran out of resources to complete this operation. |

| org.omg.dds.core.PreconditionNotMetException | Thrown when conditional alignment is requested on a non-volatile reader, or when a historical data request is already in progress or complete. |
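The size rule for filterParameters (highest referenced %n token plus one) is easy to get wrong by hand. The sketch below computes the minimum list size from a filter expression before the call is made. FilterParameterCheck is a hypothetical helper written for this illustration and is not part of the OpenSplice API. It assumes tokens are written as % followed by decimal digits and does not try to skip % characters inside quoted string literals.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical helper; not part of the OpenSplice API. */
final class FilterParameterCheck {
    // Matches %0, %1, ... and captures the index digits.
    private static final Pattern TOKEN = Pattern.compile("%(\\d+)");

    private FilterParameterCheck() {}

    /** Returns the minimum number of filterParameters the expression needs, or 0 if it has no %n tokens. */
    static int requiredParameterCount(String filterExpression) {
        if (filterExpression == null) {
            return 0; // null means no SQL filtering is requested
        }
        int highest = -1;
        Matcher m = TOKEN.matcher(filterExpression);
        while (m.find()) {
            highest = Math.max(highest, Integer.parseInt(m.group(1)));
        }
        // Token indices are zero-based, so %8 requires positions 0 through 8.
        return highest + 1;
    }
}
```

For the expression used in the parameter description, one that references %1 and %8, the helper returns 9, so a caller could compare that value with filterParameters.size() before invoking waitForHistoricalData.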

| void org.opensplice.dds.sub.DataReader< TYPE >.waitForHistoricalData | (String filterExpression, List< String > filterParameters, Time minSourceTimestamp, Time maxSourceTimestamp, Duration maxWait) throws TimeoutException |

This operation will block the application thread until all historical data that matches the supplied conditions is received.

This operation only makes sense when the receiving node has configured its durability service as an On_Request alignee. (See also the description of the //OpenSplice/DurabilityService/NameSpaces/Policy[] attribute in the Deployment Guide.) Otherwise the Durability Service will not distinguish between separate reader requests and still inject the full historical data set in each reader.

Additionally, the DataReader must be created with the DurabilityQos.kind of its DataReaderQos set to org.omg.dds.core.policy.Durability.Kind#VOLATILE, so that historical data already available at creation time is not delivered to the DataReader immediately.

| filterExpression | the SQL expression (subset of SQL), which defines the filtering criteria (null when no SQL filtering is needed). |

| filterParameters | sequence of strings with the parameter values used in the SQL expression (i.e., the values substituted for the %n tokens in the expression). The number of values in filterParameters must be at least one more than the highest %n token referenced in the filterExpression (e.g. if %1 and %8 are used as parameters in the filterExpression, filterParameters should contain at least 8 + 1 = 9 values). |

| minSourceTimestamp | Filter out all data published before this time. The special org.omg.dds.core.Time.invalidTime() can be used when no minimum filter is needed. |

| maxSourceTimestamp | Filter out all data published after this time. The special org.omg.dds.core.Time.invalidTime() can be used when no maximum filter is needed. |

| maxWait | The maximum duration the application thread is blocked during this operation. |

| TimeoutException | thrown when maxWait has expired before the applicable historical data has successfully been obtained. |

| org.omg.dds.core.DDSException | An internal error has occurred. |

| org.omg.dds.core.AlreadyClosedException | The corresponding DataReader has been closed. |

| org.omg.dds.core.OutOfResourcesException | The Data Distribution Service ran out of resources to complete this operation. |

| org.omg.dds.core.PreconditionNotMetException | Can occur when conditional alignment is requested on a non-volatile reader, or when a historical data request is already in progress or complete. |
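
The effect of minSourceTimestamp and maxSourceTimestamp can be pictured as a window over publication times. The sketch below is a model under stated assumptions, not OpenSplice code: it uses `java.time.Instant` in place of `org.omg.dds.core.Time`, uses `null` as a stand-in for `Time.invalidTime()` (no bound), and assumes that a sample published exactly at a bound is kept, since the description only excludes data published before the minimum or after the maximum. The class `SourceTimestampWindow` is hypothetical.

```java
import java.time.Instant;

// Hypothetical model of the timestamp window; not part of the OpenSplice API.
final class SourceTimestampWindow {
    /**
     * Returns true if a sample with the given source timestamp passes the window.
     * A null bound stands in for Time.invalidTime(), meaning "no filter on this side".
     */
    static boolean accepts(Instant sourceTimestamp, Instant min, Instant max) {
        boolean notTooOld = (min == null) || !sourceTimestamp.isBefore(min);
        boolean notTooNew = (max == null) || !sourceTimestamp.isAfter(max);
        return notTooOld && notTooNew;
    }

    public static void main(String[] args) {
        Instant min = Instant.parse("2024-01-01T00:00:00Z");
        Instant max = Instant.parse("2024-01-02T00:00:00Z");
        Instant inside = Instant.parse("2024-01-01T12:00:00Z");
        Instant early = Instant.parse("2023-12-31T23:59:59Z");

        System.out.println(accepts(inside, min, max));  // true
        System.out.println(accepts(early, min, max));   // false: published before min
        System.out.println(accepts(early, null, max));  // true: no minimum filter
    }
}
```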

| void | org.opensplice.dds.sub.DataReader< TYPE >.waitForHistoricalData (String filterExpression, List< String > filterParameters, ResourceLimits resourceLimits, Duration maxWait) throws TimeoutException |

This operation will block the application thread until all historical data that matches the supplied conditions is received.

This operation only makes sense when the receiving node has configured its durability service as an On_Request alignee. (See also the description of the //OpenSplice/DurabilityService/NameSpaces/Policy[] attribute in the Deployment Guide.) Otherwise the Durability Service will not distinguish between separate reader requests and still inject the full historical data set in each reader.

Additionally, the DataReader must be created with the DurabilityQos.kind of its DataReaderQos set to org.omg.dds.core.policy.Durability.Kind#VOLATILE, so that historical data already available at creation time is not delivered to the DataReader immediately.

| filterExpression | the SQL expression (subset of SQL), which defines the filtering criteria (null when no SQL filtering is needed). |

| filterParameters | sequence of strings with the parameter values used in the SQL expression (i.e., the values substituted for the %n tokens in the expression). The number of values in filterParameters must be at least one more than the highest %n token referenced in the filterExpression (e.g. if %1 and %8 are used as parameters in the filterExpression, filterParameters should contain at least 8 + 1 = 9 values). |

| resourceLimits | Specifies limits on the maximum amount of historical data that may be received. |

| maxWait | The maximum duration the application thread is blocked during this operation. |

| TimeoutException | thrown when maxWait has expired before the applicable historical data has successfully been obtained. |

| org.omg.dds.core.DDSException | An internal error has occurred. |

| org.omg.dds.core.AlreadyClosedException | The corresponding DataReader has been closed. |

| org.omg.dds.core.OutOfResourcesException | The Data Distribution Service ran out of resources to complete this operation. |

| org.omg.dds.core.PreconditionNotMetException | Can occur when conditional alignment is requested on a non-volatile reader, or when a historical data request is already in progress or complete. |

| void | org.opensplice.dds.sub.DataReader< TYPE >.waitForHistoricalData (String filterExpression, List< String > filterParameters, Duration maxWait) throws TimeoutException |

This operation will block the application thread until all historical data that matches the supplied conditions is received.

This operation only makes sense when the receiving node has configured its durability service as an On_Request alignee. (See also the description of the //OpenSplice/DurabilityService/NameSpaces/Policy[] attribute in the Deployment Guide.) Otherwise the Durability Service will not distinguish between separate reader requests and still inject the full historical data set in each reader.

Additionally, the DataReader must be created with the DurabilityQos.kind of its DataReaderQos set to org.omg.dds.core.policy.Durability.Kind#VOLATILE, so that historical data already available at creation time is not delivered to the DataReader immediately.

| filterExpression | the SQL expression (subset of SQL), which defines the filtering criteria (null when no SQL filtering is needed). |

| filterParameters | sequence of strings with the parameter values used in the SQL expression (i.e., the values substituted for the %n tokens in the expression). The number of values in filterParameters must be at least one more than the highest %n token referenced in the filterExpression (e.g. if %1 and %8 are used as parameters in the filterExpression, filterParameters should contain at least 8 + 1 = 9 values). |

| maxWait | The maximum duration the application thread is blocked during this operation. |

| TimeoutException | thrown when maxWait has expired before the applicable historical data has successfully been obtained. |

| org.omg.dds.core.DDSException | An internal error has occurred. |

| org.omg.dds.core.AlreadyClosedException | The corresponding DataReader has been closed. |

| org.omg.dds.core.OutOfResourcesException | The Data Distribution Service ran out of resources to complete this operation. |

| org.omg.dds.core.PreconditionNotMetException | Can occur when conditional alignment is requested on a non-volatile reader, or when a historical data request is already in progress or complete. |
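
Because the operation blocks for at most maxWait and reports expiry through TimeoutException, callers typically wrap it in a try/catch. The sketch below illustrates that call pattern only. The interface `HistoricalSource` is a hypothetical stand-in that mirrors the three-argument overload documented above, the latch-backed implementation is a fake that simulates alignment completing, and the use of `java.time.Duration` and `java.util.concurrent.TimeoutException` is an assumption made so that the example compiles without the OpenSplice libraries.

```java
import java.time.Duration;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

// Hypothetical sketch of the blocking call pattern; not OpenSplice code.
final class HistoricalWaitSketch {
    /** Stand-in that mirrors the documented overload's shape. */
    interface HistoricalSource {
        void waitForHistoricalData(String filterExpression, List<String> filterParameters,
                                   Duration maxWait) throws TimeoutException;
    }

    /** Fake source: historical data counts as received once the latch reaches zero. */
    static HistoricalSource latchBacked(CountDownLatch aligned) {
        return (expression, parameters, maxWait) -> {
            try {
                if (!aligned.await(maxWait.toMillis(), TimeUnit.MILLISECONDS)) {
                    throw new TimeoutException("historical data not received within " + maxWait);
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // preserve the interrupt flag
                throw new IllegalStateException("interrupted while waiting", e);
            }
        };
    }

    /** Returns true if the data arrived within maxWait, false if the wait timed out. */
    static boolean tryWait(HistoricalSource source, Duration maxWait) {
        try {
            source.waitForHistoricalData("x > %0", Arrays.asList("10"), maxWait);
            return true;
        } catch (TimeoutException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        CountDownLatch done = new CountDownLatch(1);
        done.countDown(); // simulate alignment that has already finished
        System.out.println(tryWait(latchBacked(done), Duration.ofMillis(50)));                  // true
        System.out.println(tryWait(latchBacked(new CountDownLatch(1)), Duration.ofMillis(50))); // false
    }
}
```

Whether to retry, proceed with partial data, or fail after a timeout is an application decision that the description above leaves open.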

| void | org.opensplice.dds.sub.DataReader< TYPE >.waitForHistoricalData (Time minSourceTimestamp, Time maxSourceTimestamp, ResourceLimits resourceLimits, Duration maxWait) throws TimeoutException |

This operation will block the application thread until all historical data that matches the supplied conditions is received.

This operation only makes sense when the receiving node has configured its durability service as an On_Request alignee. (See also the description of the //OpenSplice/DurabilityService/NameSpaces/Policy[] attribute in the Deployment Guide.) Otherwise the Durability Service will not distinguish between separate reader requests and still inject the full historical data set in each reader.

Additionally, the DataReader must be created with the DurabilityQos.kind of its DataReaderQos set to org.omg.dds.core.policy.Durability.Kind#VOLATILE, so that historical data already available at creation time is not delivered to the DataReader immediately.

| minSourceTimestamp | Filter out all data published before this time. The special org.omg.dds.core.Time.invalidTime() can be used when no minimum filter is needed. |

| maxSourceTimestamp | Filter out all data published after this time. The special org.omg.dds.core.Time.invalidTime() can be used when no maximum filter is needed. |

| resourceLimits | Specifies limits on the maximum amount of historical data that may be received. |

| maxWait | The maximum duration the application thread is blocked during this operation. |

| TimeoutException | thrown when maxWait has expired before the applicable historical data has successfully been obtained. |

| org.omg.dds.core.DDSException | An internal error has occurred. |

| org.omg.dds.core.AlreadyClosedException | The corresponding DataReader has been closed. |

| org.omg.dds.core.OutOfResourcesException | The Data Distribution Service ran out of resources to complete this operation. |

| org.omg.dds.core.PreconditionNotMetException | Can occur when conditional alignment is requested on a non-volatile reader, or when a historical data request is already in progress or complete. |

| void | org.opensplice.dds.sub.DataReader< TYPE >.waitForHistoricalData (Time minSourceTimestamp, Time maxSourceTimestamp, Duration maxWait) throws TimeoutException |

This operation will block the application thread until all historical data that matches the supplied conditions is received.

This operation only makes sense when the receiving node has configured its durability service as an On_Request alignee. (See also the description of the //OpenSplice/DurabilityService/NameSpaces/Policy[] attribute in the Deployment Guide.) Otherwise the Durability Service will not distinguish between separate reader requests and still inject the full historical data set in each reader.

Additionally, the DataReader must be created with the DurabilityQos.kind of its DataReaderQos set to org.omg.dds.core.policy.Durability.Kind#VOLATILE, so that historical data already available at creation time is not delivered to the DataReader immediately.

| minSourceTimestamp | Filter out all data published before this time. The special org.omg.dds.core.Time.invalidTime() can be used when no minimum filter is needed. |

| maxSourceTimestamp | Filter out all data published after this time. The special org.omg.dds.core.Time.invalidTime() can be used when no maximum filter is needed. |

| maxWait | The maximum duration the application thread is blocked during this operation. |

| TimeoutException | thrown when maxWait has expired before the applicable historical data has successfully been obtained. |

| org.omg.dds.core.DDSException | An internal error has occurred. |

| org.omg.dds.core.AlreadyClosedException | The corresponding DataReader has been closed. |

| org.omg.dds.core.OutOfResourcesException | The Data Distribution Service ran out of resources to complete this operation. |

| org.omg.dds.core.PreconditionNotMetException | Can occur when conditional alignment is requested on a non-volatile reader, or when a historical data request is already in progress or complete. |
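
The PreconditionNotMetException conditions listed above amount to two checks: the reader must be volatile, and no historical data request may already be in progress or complete. The sketch below models those checks as a small state machine. It is an illustration under assumptions, not the OpenSplice implementation: the class `HistoricalRequestGuard` is hypothetical, and `IllegalStateException` stands in for `PreconditionNotMetException`.

```java
// Hypothetical model of the documented preconditions; not OpenSplice code.
final class HistoricalRequestGuard {
    enum State { NOT_REQUESTED, IN_PROGRESS, COMPLETE }

    private final boolean volatileReader;
    private State state = State.NOT_REQUESTED;

    HistoricalRequestGuard(boolean volatileReader) {
        this.volatileReader = volatileReader;
    }

    /** Starts a conditional request, or throws if a documented precondition fails. */
    void begin() {
        if (!volatileReader) {
            throw new IllegalStateException("conditional alignment requested on a non-volatile reader");
        }
        if (state != State.NOT_REQUESTED) {
            throw new IllegalStateException("historical data request already " + state);
        }
        state = State.IN_PROGRESS;
    }

    /** Marks the outstanding request as finished. */
    void complete() {
        state = State.COMPLETE;
    }

    /** Returns true if begin() was accepted, false if a precondition failed. */
    boolean tryBegin() {
        try {
            begin();
            return true;
        } catch (IllegalStateException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        HistoricalRequestGuard guard = new HistoricalRequestGuard(true);
        System.out.println(guard.tryBegin()); // true: first request on a volatile reader
        System.out.println(guard.tryBegin()); // false: request already in progress
        guard.complete();
        System.out.println(guard.tryBegin()); // false: request already complete
        System.out.println(new HistoricalRequestGuard(false).tryBegin()); // false: non-volatile reader
    }
}
```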

| void | org.opensplice.dds.sub.DataReader< TYPE >.waitForHistoricalData (ResourceLimits resourceLimits, Duration maxWait) throws TimeoutException |

This operation will block the application thread until all historical data that matches the supplied conditions is received.

This operation only makes sense when the receiving node has configured its durability service as an On_Request alignee. (See also the description of the //OpenSplice/DurabilityService/NameSpaces/Policy[] attribute in the Deployment Guide.) Otherwise the Durability Service will not distinguish between separate reader requests and still inject the full historical data set in each reader.